Lack of cross-jurisdiction billing costing millions

September 25, 2023

PR: From Zero to Hero

October 17, 2024Role of GPU and CPU in Generative AI use cases for scalable enterprise applications.

Originally posted on The Fast Mode on October 4, 2023.

In a candid admission to the US Senate, OpenAI's CEO, Sam Altman, highlighted a significant challenge for companies wanting to integrate AI technology: a severe graphics processing unit (GPU) shortage. Altman’s own words, "We're so short on GPUs that the fewer people who use the tool, the better." This raises a vital question: Are we overlooking the potential of central processing units (CPUs) in generative AI? Additionally, do all companies truly need the full range of capabilities offered by large language models (LLMs) like ChatGPT, which have billions of parameters?

GPUs and GenAI seem like a perfect match

Initially designed for rendering graphics in video games and animations, GPUs soon found a new role in supporting artificial intelligence (AI) tasks. GPUs possess an inherent ability to divide large tasks into smaller ones and execute them in parallel. This capability, known as parallel computing, enables GPUs to handle thousands of tasks simultaneously. This characteristic makes GPUs especially suited for applications in deep learning and reinforcement learning, such as facial recognition, object identification within videos, and critical autonomous driving functions like recognizing stop signs and navigating obstacles in autonomous vehicles.

LLMs like GPT-3 and GPT-4, which are essential for AI applications such as virtual assistants or chatbots, are intricate models containing billions of parameters. Fine-tuning these parameters demands processing vast datasets at high speeds. In this context, GPUs play a pivotal role. They not only accelerate complex training phases, including forward and backward propagation, but their proficiency in parallel processing ensures that these massive LLMs are continually optimized and updated efficiently.

A Perfect Storm: The long roots of the GPU shortage

The introduction of OpenAI's ChatGPT last year ignited a surge of interest in AI and amplified the immense capabilities of generative AI to the public. ChatGPT's ability to produce coherent, contextually relevant, and often indistinguishable-from-human text responses triggered a profound shift in public perception and acceptance of AI. As a result, there has been a rush across industries to integrate generative AI in every application and solution, and almost every startup is now claiming to be an AI company. This has led to an unprecedented demand for high-end GPUs. Despite manufacturers operating at full capacity, the supply cannot fulfill the growing demand. Several experts predict that this shortage may persist for a few years. Significant efforts are being made to explore alternatives to GPUs, including the use of ASICs, FPGAs, and CPUs.

Most companies have bypassed the need for large and fixed data centers by utilizing cloud computing services from leading providers such as Google, Microsoft, and Amazon. These services offer access to specialized AI chips and significant computational power with seemingly limitless scalability. However, with the newfound focus on AI, the wait times to acquire and use GPU chips have, in some instances, extended to a year. For companies willing to bypass the wait and pay a premium, the inflated costs often do not make financial sense from a return on investment (ROI) perspective. With the expanding potential in generative AI for most businesses, the GPU shortage is complicating their AI integration efforts, as they grapple with prolonged project timelines and difficulty in procuring necessary computational assets.

A recent New York Times article highlighted the extraordinary measures companies are taking to secure the compute they need for their AI-powered initiatives. As both affluent nations and top-tier corporations scramble for these resources, GPUs have become as sought-after as rare earth metals.

The cost of GPUs

The extraordinary capabilities of models like GPT-3, equipped with 175 billion parameters, come with an equally hefty price tag. With an estimated training expenditure of $4.6 million, GPT-3 stands as a testament to the rising costs of cutting-edge AI. Such prohibitive budgets have created a chasm in the AI world, placing state-of-the-art LLMs within the exclusive grasp of industry behemoths and affluent institutions in the Global North. Meanwhile, many startups, and entities without the luxury of supercomputers or substantial cloud credits find themselves sidelined.

An analyst told The Information publication that it might cost OpenAI about $700,000 a day to keep ChatGPT running because it uses high-end servers. This shows just how expensive it is to operate big AI models. The significant operational costs make many companies think twice about how they use AI and where they spend their money. The scale of computational prowess required for ChatGPT to effectively answer queries emphasizes the profound cost differentials in the GPU-driven AI landscape, challenging many enterprises to rethink their strategies and resource allocations. For the first time in history, many enterprises might need to redefine their business and operational strategies to take into account the arrival of GenAI driven capabilities that seemed to be, not a long time ago, a good number of years away.

Can CPUs be used for GenAI?

Both CPUs and GPUs have distinct computational strengths that play vital roles in generative AI. Designed as general-purpose processors, CPUs excel in handling diverse tasks, especially those that are sequential. They efficiently manage initial stages of AI processes such as data ingestion, cleaning, and basic processing. In fact, around 65%-70% of generative AI tasks, encompassing areas like MLOps and pipeline management, are driven by CPUs.

Even recent frameworks, like Semantic Kernel and LangChain, effectively utilize both CPUs and GPUs, aiming for optimal performance within controlled cost environments. The evolution in CPU technology, especially with features like deep learning acceleration has bolstered its place in AI, as highlighted by the successful training of models like Stable Diffusion (a deep learning, text-to-image model) and Hugging Face’s BERT model (designed to pre-train deep bidirectional representations from unlabeled text) on CPUs.

In the highly regulated legal industry, early adoption of AI centers around two pivotal metrics: the extent to which AI can automate a specific task and the inherent value derived from this automation. While generative AI and LLMs have only recently emerged as mainstays, Hercules has been deploying robust and secure on-prem AI solutions for enterprises for the past 5 years. These deployments include some of the highest regulated industries like legal services, transforming business processes for many enterprises in this vertical.

Ropers Majeski, an international law firm, exemplifies the practical use of CPUs in real-world generative AI applications. To streamline essential tasks like document and email filing, data retrieval, and report creation across their global offices, Ropers Majeski sought to overcome manual inefficiencies that wasted time and introduced errors. They turned to Hercules for an AI solution that could adhere to rigorous regulatory compliance while safeguarding sensitive data from potential external exposures. Together with Intel, Hercules introduced their generative AI application to Ropers Majeski, eliminating the dependence on GPUs. This solution capitalized on the strength of Hercules, Hercules proprietary orchestration and operating AI engine, and seamlessly integrated it with Intel’s 4th Gen Intel Xeon scalable processors. The outcome? A striking 18.5% boost in attorney productivity at Ropers Majeski, equating to a timesaving of 75 minutes per user each day, accompanied by enhanced data security and stringent compliance measures.

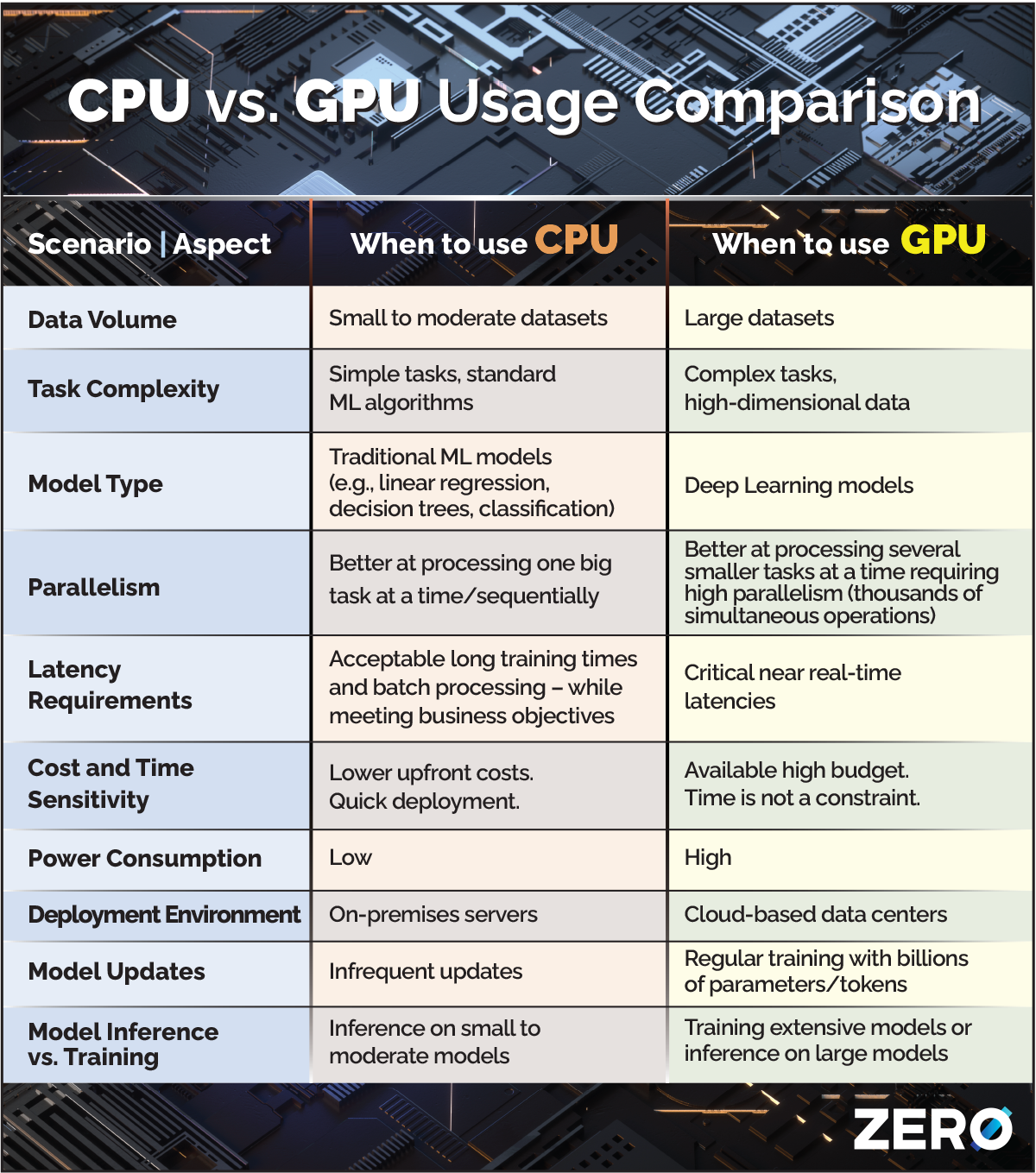

When to use a CPU vs. a GPU?

There is little doubt that GPUs have become essential in the age of AI and with no signs of slowing down. While GPUs have become indispensable, CPUs are often overlooked as an alternative for companies that need a GPU substitute for inferencing. The decision between CPUs and GPUs is not strictly an either-or one. The following table offers a breakdown, comparing scenarios and applications where each processing unit excels:

GPUs excel when processing extensive machine learning datasets using tensors. In contrast, CPUs emerge as a cost-effective alternative in situations where the power of GPUs might be overkill.

What’s the best solution for enterprises looking to deploy GenAI solutions today?

Navigating the generative AI ecosystem requires informed decisions on computational choices and requirements. These decisions must consider both operational and application needs in a balanced and well-articulated architecture. While GPUs have been the go-to chips for data-intensive tasks, the capabilities and flexibility of modern CPUs should not be underestimated. The distinction between these two affects not only the hardware selection but also the broader strategy, impacting deployment efficiency and return on investment.

Beyond computational power, the holistic architecture involving networking, memory, and the ability to group compute in clusters is vital. The optimal approach might involve harnessing the strengths of both GPUs and CPUs for AI training, while capitalizing on CPUs for efficient inference.

In today’s age, enterprises, conscious of escalating AI operational costs and power efficiency, need to consider the economic benefits of leveraging CPUs, especially for specific inference tasks and application architecture environments. Although CPUs might not always replace the role of GPUs in training large language models, they present a promising avenue for cost-effective deployment of pre-trained models. Embracing this dynamic not only stands to make generative AI more accessible but also challenges CPU manufacturers to foster strategic alliances, target research and development, and ensure seamless integration with the current AI stack, from data to inference and back.

As enterprises pursue generative AI solutions that blend cost-effectiveness with high performance, it's important to consider solutions like Hercules. They combine CPU hardware and generative AI architecture designed to optimize return on investment for customers.

About Catalin Vieru

Catalin is the Head of Enterprise Architecture at Hercules, where he leads the development and operation of end-to-end AI platforms and solutions that drive business value in fast-paced technology and GenAI environments.

Hercules is a generative AI company that brings the power of large language models (LLM) inside the security perimeter of enterprises.

Our AI applications augment knowledge workers, resulting in higher revenue, improved profitability, and enhanced worker satisfaction.